CS180 Project 1

Colorizing the Prokudin-Gorskii photo collection

Task 1: Exhaustive Search

For smaller images, we can use an exhaustive search to try all possible “offsets”, in which we shift an image in the x or y direction, within a range to find an optimal alignment for overlaying the three images together. The pseudocode is pretty straightforward:

- Select one image as the reference image. We use the B channel here.

- For all other images, perform an exhaustive search in the range -N to +N pixels for the x and y direction. We use N=20 here.

- Shift the image by the specified displacement, and use some sort of metric to determine the quality of this alignment. Record the highest score. d We tried three different metrics, with varying runtimes and effectiveness:

In addition to this, we also cropped images before applying the exhaustive search. This is because each image had black borders around the outer edges which heavily influenced the metrics, as it would optimize trying to align the black pixels with each other as a way to improve the score. Taking the center 80% of the image, removing the borders, improved image quality heavily. We then use the displacement that we get from the cropped images and apply it to the uncropped images, to get the final result:

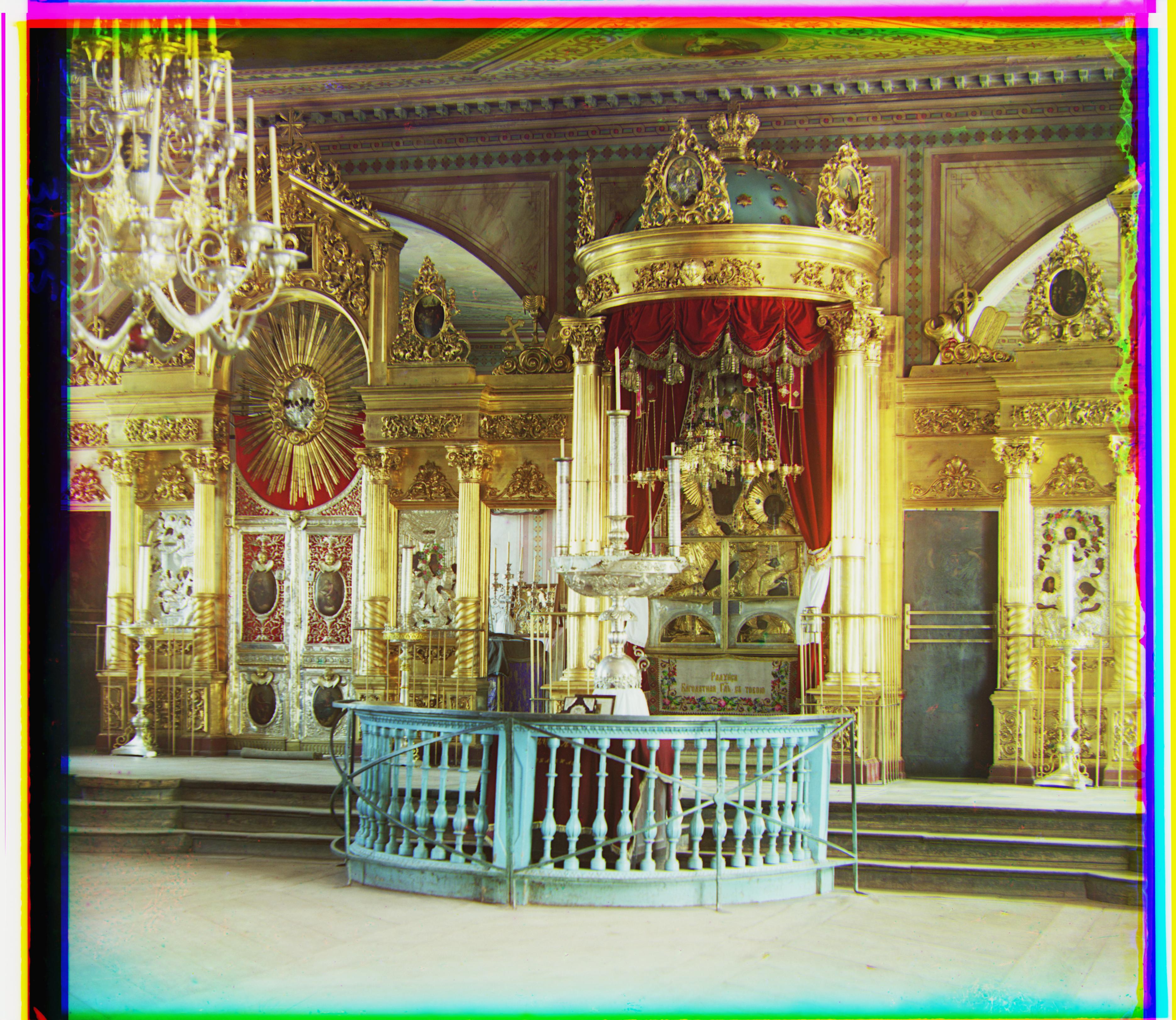

r displacement: (12, 3)

g displacement: (5, 2)

r displacement: (3, 2)

g displacement: (-3, 2)

r displacement: (6, 3)

g displacement: (3, 3)

Task 2: Image Pyramid

With larger images however, an exhaustive search would take too much time. After all, it’s an N^2 runtime. Some images had an optimal offset in the 100s, and increasing the search range to 100 would require 40000+ calls to normalized cross correlation for each alignment.

Therefore, we’ll use an image pyramid in order to speed up the runtime, with little sacrifice in how we run the search.

- First, we scale down the image in factors of 2 until we reach a low enough resolution. Here, we specify this as having a width or height below 360 pixels.

- We run our exhaustive search algorithm on this scaled down image, with a search range of -36 and 36. This gives us the optimal displacement for this scaled down image.

- Take the current optimal displacement and double it in both directions. Halve the search range and scale up the image by 2x in each direction and rerun the exhaustive search, searching in the range centered around our current optimal displacement.

- Repeat step 3 until we reach the original image’s resolution, and return the optimal displacement.

As it’s much faster to compute the metric when our image is small, this method is way faster and still allows us to search a huge range of our image for an optimal displacement.

For the large images in particular, cropping was essential. Cropping away 20% of the edges ensured that only the important center areas of each image was used to determine if an alignment is appropriate, resulting in images with far better alignments. Cropping also had the secondary effect of reducing the image size, reducing the number of times we need to scale down.

The results were promising, and each image took at most 40 seconds to align.

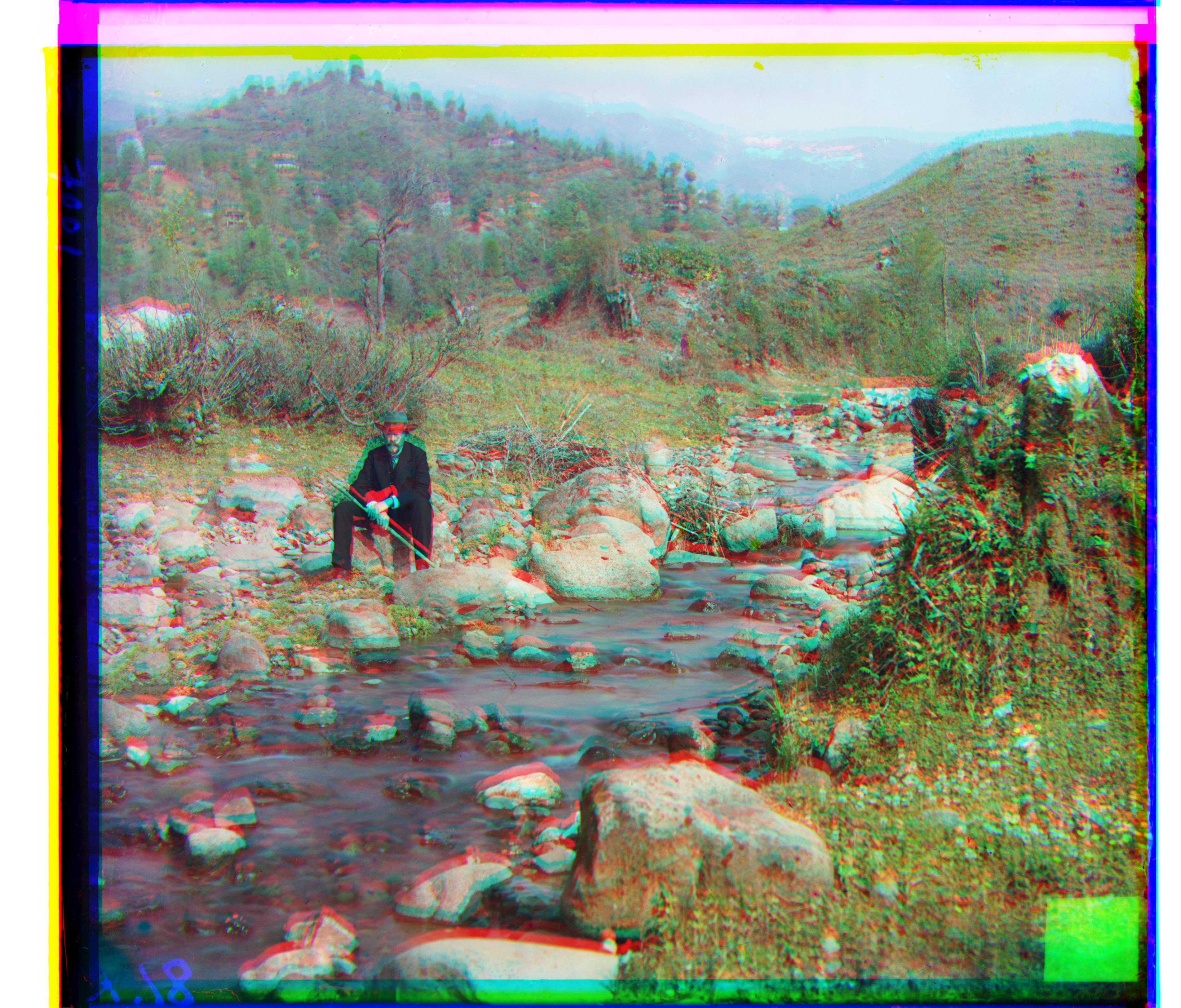

r displacement: (59, -4)

g displacement: (25, 4)

r displacement: (103, 55)

g displacement: (49, 24)

r displacement: (124, 13)

g displacement: (59, 16)

r displacement: (89, 23)

g displacement: (41, 17)

r displacement: (112, 11)

g displacement: (51, 9)

r displacement: (58, -4)

g displacement: (25, 4)

r displacement: (139, -26)

g displacement: (33, -11)

r displacement: (130, -26)

g displacement: (33, -11)

r displacement: (141, 33)

g displacement: (78, 29)

r displacement: (112, 11)

g displacement: (53, 14)

r displacement: (87, 32)

g displacement: (42, 5)